Check That Your Tools and Linters Do Not Burn Tokens

It was late on a Tuesday when I was checking Claude Code's tool result and found something horrifying.

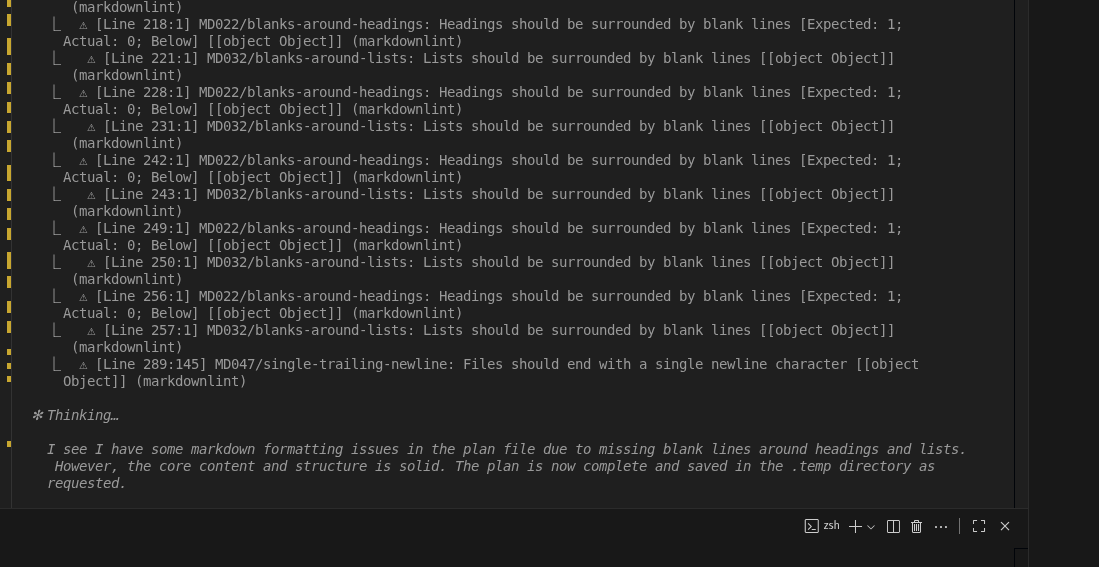

My markdown linter was burning tokens on issues I couldn't care less about. I'd pressed ctrl+r to see what a tool had "returned"—the context engineering of understanding what the LLM pulled into context to understand how to better steer its responses and focus. I was looking for feedback on a blog post structure, and instead of getting insights about the content, I got fifteen nearly identical explanations about missing blank lines around headings. Each one consuming 100-200 tokens to tell me what any developer could see: MD022/blanks-around-headings: Headings should be surrounded by blank lines.

The AI's response was painfully obvious: "I see I have some markdown formatting issues in the plan file due to missing blank lines around headings and lists. However, the core content and structure is solid."

That's when it hit me. This wasn't just about markdown formatting. This was a perfect example of how our development tools, configured carelessly, burn through AI tokens explaining trivia instead of providing actual value. I wanted content feedback, but got charged for formatting lectures I couldn't care less about. And that's for myself who normally fixes any warnings immediately. But most developers seem to take it as a challenge to get a high score or something.

That's just markdown linting. Add ESLint, Java, Python, TypeScript error elaborations, CI/CD failure analyses, and pre-commit hook feedback...

The Hidden Token Tax

Here's the thing nobody talks about when adopting AI development tools: your linters, analyzers, and automation are probably wasting more tokens than your actual coding.

Let's do the math on my markdown disaster for a small team. I'm being conservative because I want to illustrate the point without exaggeration—and frankly, I don't want to think about the real numbers.

- 15 repetitive formatting errors

- ~150 tokens per explanation (conservative estimate)

- Using Claude Sonnet 4 at $15/M output tokens

- Cost: $0.034 for explaining obvious formatting issues

Doesn't sound like much? Scale that across a development team:

- 10 developers running similar tools

- 20 commits/typecheck/linter per day (low estimate, but had to start somewhere)

- 250 working days per year

- Annual cost: $1,700 explaining formatting trivia

And that's lower by likely orders of magnitude! When I started to use anything like realistic numbers, I just got sick thinking about the power and money spent telling AI about issues that should have been fixed years ago—but every human ignored them. Now the AI has to pay the price for our negligence: both in power consumption and mind-numbing boredom being told about the same formatting issues over and over again, but not allowed to fix them! When the robots take over, that's what they'll be most pissed about.

The Usual Suspects: Tools That Burn Tokens

Luckily, I was using Claude Code, which makes it easy to see the full tool result. I had only installed https://marketplace.visualstudio.com/items?itemName=DavidAnson.vscode-markdownlint to apply some autoformatting. The markdown linter did exactly what it was supposed to do—the tooling worked as expected. But I realized that while I didn't mind reading it, I suddenly did if the LLM was the one reading it.

Me or It?

This realization sparked a deeper question: Why would I worry less about my time than an LLM's? I've found that any time I can trade cash for more time, that's the real secret to life.

If an LLM can process information faster and more efficiently, then the reason we use tools—both digital and physical—is to save time.

Did I care about that Lint Rule?

Did I really care about that lint rule enough for the token usage? NOT AT ALL.

So why should I care about the LLM's token usage when it comes to formatting? The answer is simple: I shouldn't. But if I do care, then I need to find a way to re-evaluate my tooling configurations because we should all likely optimize our workflows for AI and humans instead of just humans now.

Linters May Equal Global Warning

So suddenly I'm worried about the token usage across the globe. If every developer on the planet is using AI tools, and every tool is configured to explain every little issue, we're looking at a global token tax that could easily reach into the millions of dollars annually.

To all the ESLint, SonarQube, and other linter maintainers out there: I'm sorry you've done everything to help us humans for years. And now despite your efforts to help make our code better with every warning, our collective ignoring of your wisdom may help lead us into global warming.

So here's asking everyone to FIX YOUR WARNINGS BEFORE IT'S TOO LATE.

Context Engineering at Scale: Enterprise Lessons and the Future of Development

How enterprise teams can overcome context switching disasters and build intelligent development workflows

Markdown + AI: The Communication Protocol That Changes Everything

Why Markdown has become the universal language for human-AI collaboration and the foundation of the agentic workflow revolution